Kubernetes (K8s) exposes the functionality of pods through services. In the Kubernetes world, a service is an abstraction that describes a set of related endpoints, for example:

- multiple web servers

- multiple mail transfer agents that share the sending load

- multiple application instances that expose the same functionality

When deploying an application in Kubernetes, you have several options to choose from:

- deployments
  - useful when applications don’t need state and scaling doesn’t need any ordering
- statefulsets
  - useful when applications need state (e.g. databases) and each pod needs its own identity and persistent storage
- daemonsets
  - useful when an application must run on all nodes of the cluster (e.g. for logging)
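As an illustration of the last option, a minimal DaemonSet that runs one log-collecting pod per node might look like the sketch below. The name `log-agent` and the `fluent/fluent-bit` image are assumptions chosen for the example; the toleration assumes the kubeadm default control-plane taint, so that the pod is also scheduled on the master nodes.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: log-agent                    # hypothetical name
spec:
  selector:
    matchLabels:
      app: log-agent
  template:
    metadata:
      labels:
        app: log-agent               # must match the selector above
    spec:
      # kubeadm taints control-plane nodes; tolerating the taint lets the agent run there too
      tolerations:
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
        effect: NoSchedule
      containers:
      - name: agent
        image: fluent/fluent-bit     # assumed log shipper image; any agent works
        volumeMounts:
        - name: varlog
          mountPath: /var/log
          readOnly: true
      volumes:
      - name: varlog
        hostPath:
          path: /var/log             # the node's own log directory
```

Unlike a deployment there is no `replicas` field: the number of pods follows the number of eligible nodes.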

These applications need to be exposed to other applications or systems inside and outside the cluster:

- inside connection – any node or any pod in the cluster

- outside connection – any system that has a network connection to the cluster (other than the nodes themselves)

Inside the cluster, each pod can be reached by its IP address or by a hostname resolved through the cluster DNS, but you usually want to spread the load between pods, and that is what services are for. With deployments it is also hard to know a pod’s hostname or IP in advance, because pods get generated names and new IPs whenever they are recreated.
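A service, on the other hand, gets a stable DNS name. As a sketch, assuming the `nginx-service` service created in the ClusterIP section below, the `default` namespace and the default `cluster.local` cluster domain, a throwaway client pod could reach it by name instead of by pod IP. The pod name `dns-client` is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dns-client            # hypothetical name, used only for this example
spec:
  restartPolicy: Never        # run the request once and stop
  containers:
  - name: client
    image: busybox
    # <service>.<namespace>.svc.<cluster-domain>, assuming the default "cluster.local" domain
    command: ["wget", "-qO-", "http://nginx-service.default.svc.cluster.local"]
```

From a pod in the same namespace, the short name `nginx-service` also resolves, thanks to the DNS search domains Kubernetes writes into each pod’s `/etc/resolv.conf`.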

Kubernetes provides three types of services for this:

- ClusterIP
  - the IP address is automatically assigned from an address pool that the cluster reserves for this purpose; it is only reachable from inside the cluster (nodes and pods), not from other machines on the nodes’ networks
  - the IP can also be set manually
  - balances traffic between pods
  - used for accessing the service from inside the cluster
- NodePort
  - allocates a port from a port range (30000–32767 by default) and every node (master or worker) proxies that port
  - you need to implement your own load balancing between nodes (masters and workers) and plan for node failures
  - balances traffic between the pods of the service
  - used for accessing the cluster from outside
- LoadBalancer
  - typically used in cloud environments where a load balancer implementation that integrates with Kubernetes exists
  - balances traffic between nodes
  - balances traffic between pods
  - used for accessing the cluster from outside
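For example, a ClusterIP service with a manually chosen address could look like the sketch below. The service name and the address `10.100.0.50` are hypothetical; the address must be unused and inside the cluster’s service CIDR, assumed here to be the kubeadm default `10.96.0.0/12` (consistent with the `10.96.0.1` address of the `kubernetes` service shown later). The selector reuses the pod label from the deployment in the next section.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-fixed-ip        # hypothetical name
spec:
  type: ClusterIP
  # must be a free address inside the service CIDR;
  # 10.100.0.50 assumes the kubeadm default range 10.96.0.0/12
  clusterIP: 10.100.0.50
  selector:
    app.kubernetes.io/name: web-server-instance
  ports:
  - name: http-port
    protocol: TCP
    port: 80
    targetPort: 80
```

If `clusterIP` is omitted, as in the rest of this article, Kubernetes picks a free address from the same range.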

ClusterIP

Let’s take the following cluster configuration:

```
sabin@sabin-pc:~$ kubectl get nodes
NAME       STATUS   ROLES           AGE     VERSION
master-1   Ready    control-plane   6d22h   v1.27.3
master-2   Ready    control-plane   6d22h   v1.27.3
master-3   Ready    control-plane   6d22h   v1.27.3
worker-1   Ready    <none>          6d22h   v1.27.3
worker-2   Ready    <none>          6d22h   v1.27.3
worker-3   Ready    <none>          141m    v1.27.3
```

Let’s add a deployment and define a service for it:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app.kubernetes.io/name: web-server
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
        app.kubernetes.io/name: web-server-instance
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
          name: http-web-svc
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: ClusterIP
  selector:
    app.kubernetes.io/name: web-server-instance
  ports:
  - name: http-port
    protocol: TCP
    port: 80
    targetPort: http-web-svc
```

We can list the pods and the service:

```
sabin@sabin-pc:~$ kubectl get pods
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-65f96988fb-8vlbq   1/1     Running   0          3m16s
nginx-deployment-65f96988fb-fs8lj   1/1     Running   0          3m15s
nginx-deployment-65f96988fb-kt2q4   1/1     Running   0          3m15s
sabin@sabin-pc:~$ kubectl get svc
NAME            TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes      ClusterIP   10.96.0.1       <none>        443/TCP   6d23h
nginx-service   ClusterIP   10.99.248.132   <none>        80/TCP    2m36s
sabin@sabin-pc:~$ kubectl describe svc nginx-service
Name:              nginx-service
Namespace:         default
Labels:            <none>
Annotations:       <none>
Selector:          app.kubernetes.io/name=web-server-instance
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.109.220.13
IPs:               10.109.220.13
Port:              http-port  80/TCP
TargetPort:        http-web-svc/TCP
Endpoints:         10.244.3.9:80,10.244.4.7:80,10.244.5.20:80
Session Affinity:  None
Events:            <none>
```

# go to the master-1 node and try to see if you have access inside the cluster

k8suser@master-1:/tmp$ wget http://10.109.220.13

--2023-07-11 16:13:02-- http://10.109.220.13/

Connecting to 10.109.220.13:80... connected.

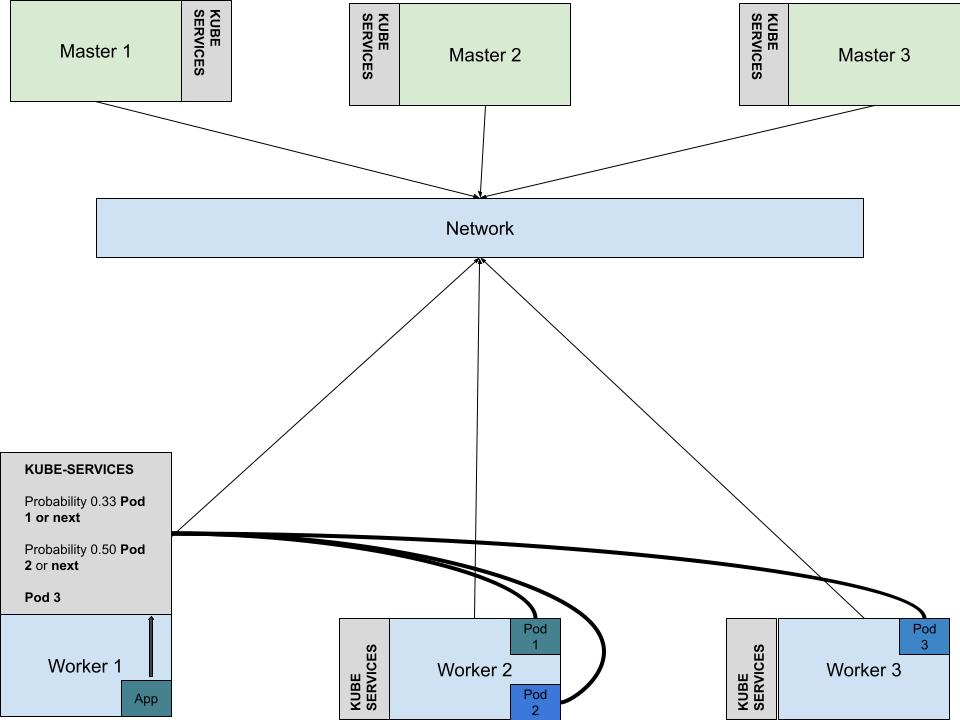

HTTP request sent, awaiting response... 200 OKLet’s see how the “pod balancing” is done inside the cluster. To find this we need to look at the modifications done by the kube-proxy to iptables.

k8suser@master-1:~$ sudo iptables -t nat -S KUBE-SERVICES

-A KUBE-SERVICES -d 10.109.220.13/32 -p tcp -m comment --comment "default/nginx-service:http-port cluster IP" -m tcp --dport 80 -j KUBE-SVC-VSXJECWTN7CAXPNE

# when the destination is 10.109.220.13 the packet processing will jump to KUBE-SVC-VSXJECWTN7CAXPNE chain

k8suser@master-1:~$ sudo iptables -t nat -S KUBE-SVC-VSXJECWTN7CAXPNE

-N KUBE-SVC-VSXJECWTN7CAXPNE

-A KUBE-SVC-VSXJECWTN7CAXPNE ! -s 10.244.0.0/16 -d 10.109.220.13/32 -p tcp -m comment --comment "default/nginx-service:http-port cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ

-A KUBE-SVC-VSXJECWTN7CAXPNE -m comment --comment "default/nginx-service:http-port -> 10.244.3.9:80" -m statistic --mode random --probability 0.33333333349 -j KUBE-SEP-5Y4PUVAQQKTVBM67

-A KUBE-SVC-VSXJECWTN7CAXPNE -m comment --comment "default/nginx-service:http-port -> 10.244.4.7:80" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-6VDI4YHN7J2ZLURC

-A KUBE-SVC-VSXJECWTN7CAXPNE -m comment --comment "default/nginx-service:http-port -> 10.244.5.20:80" -j KUBE-SEP-EUATS3YBX36IMXHL

# please note the probability dispatching to endpoints "--mode random --probability"We can see in the following diagram how a packet from an application that wants to access a service in any of the pods 1,2,3 is dispatched with the help of iptables to the right pod IP. Once the pod IP is selected with a random probability( over time this tends to an even load across pods ), the IP packet from the originating pod is sent to the worker node that hosts the destination service pod. You can connect to any of the nodes( no matter the role ) and access the service declared above.
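The probabilities in the KUBE-SVC chain are conditional: a rule is only evaluated if the rules before it did not match, and the last rule has no probability at all. For the three endpoints above, the chance of a new connection landing on each pod works out as follows (0.33333333349 is treated as approximately 1/3):

$$
\begin{aligned}
P(\text{10.244.3.9})  &= \tfrac{1}{3} \\
P(\text{10.244.4.7})  &= \left(1 - \tfrac{1}{3}\right)\cdot \tfrac{1}{2} = \tfrac{1}{3} \\
P(\text{10.244.5.20}) &= \left(1 - \tfrac{1}{3}\right)\left(1 - \tfrac{1}{2}\right)\cdot 1 = \tfrac{1}{3}
\end{aligned}
$$

More generally, with $n$ endpoints and rule $i$ using probability $p_i = \frac{1}{n-i+1}$, the product telescopes to an even split:

$$
P(i) = \frac{1}{n-i+1}\prod_{j=1}^{i-1}\left(1 - \frac{1}{n-j+1}\right) = \frac{1}{n-i+1}\cdot\frac{n-i+1}{n} = \frac{1}{n}
$$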

NodePort

To create a NodePort service, all we need to change is the service type (here we also pin the node port to 30007):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app.kubernetes.io/name: web-server
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
        app.kubernetes.io/name: web-server-instance
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
          name: http-web-svc
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: NodePort
  selector:
    app.kubernetes.io/name: web-server-instance
  ports:
  - name: http-port
    protocol: TCP
    port: 80
    targetPort: http-web-svc
    nodePort: 30007
```

At this point we are able to access the service from outside the cluster:

```
sabin@sabin-pc:/tmp$ kubectl describe svc nginx-service
Name:                     nginx-service
Namespace:                default
Labels:                   <none>
Annotations:              <none>
Selector:                 app.kubernetes.io/name=web-server-instance
Type:                     NodePort
IP Family Policy:         SingleStack
IP Families:              IPv4
IP:                       10.109.220.13
IPs:                      10.109.220.13
Port:                     http-port  80/TCP
TargetPort:               http-web-svc/TCP
NodePort:                 http-port  30007/TCP
Endpoints:                10.244.3.10:80,10.244.4.8:80,10.244.5.21:80
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
# access the service from outside the cluster on port 30007
sabin@sabin-pc:/tmp$ wget http://192.168.0.50:30007
--2023-07-12 11:06:17--  http://192.168.0.50:30007/
Connecting to 192.168.0.50:30007... connected.
HTTP request sent, awaiting response... 200 OK
```

If we look at how packets are forwarded inside the cluster, we can see that the main difference is the extra rule in the “KUBE-NODEPORTS” chain; through the KUBE-EXT chain, the traffic ends up in the same KUBE-SVC chain used for the ClusterIP:

```
k8suser@master-1:~$ sudo iptables -t nat -S KUBE-NODEPORTS
-N KUBE-NODEPORTS
-A KUBE-NODEPORTS -p tcp -m comment --comment "default/nginx-service:http-port" -m tcp --dport 30007 -j KUBE-EXT-VSXJECWTN7CAXPNE
k8suser@master-1:~$ sudo iptables -t nat -S KUBE-EXT-VSXJECWTN7CAXPNE
-N KUBE-EXT-VSXJECWTN7CAXPNE
-A KUBE-EXT-VSXJECWTN7CAXPNE -m comment --comment "masquerade traffic for default/nginx-service:http-port external destinations" -j KUBE-MARK-MASQ
-A KUBE-EXT-VSXJECWTN7CAXPNE -j KUBE-SVC-VSXJECWTN7CAXPNE
k8suser@master-1:~$ sudo iptables -t nat -S KUBE-SVC-VSXJECWTN7CAXPNE
-N KUBE-SVC-VSXJECWTN7CAXPNE
-A KUBE-SVC-VSXJECWTN7CAXPNE ! -s 10.244.0.0/16 -d 10.109.220.13/32 -p tcp -m comment --comment "default/nginx-service:http-port cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ
-A KUBE-SVC-VSXJECWTN7CAXPNE -m comment --comment "default/nginx-service:http-port -> 10.244.3.10:80" -m statistic --mode random --probability 0.33333333349 -j KUBE-SEP-CEGVYV4MOMDOASW2
-A KUBE-SVC-VSXJECWTN7CAXPNE -m comment --comment "default/nginx-service:http-port -> 10.244.4.8:80" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-MJN6QLOUXSXYLL6U
-A KUBE-SVC-VSXJECWTN7CAXPNE -m comment --comment "default/nginx-service:http-port -> 10.244.5.21:80" -j KUBE-SEP-UCTBSKRL3IC7LDUY
```

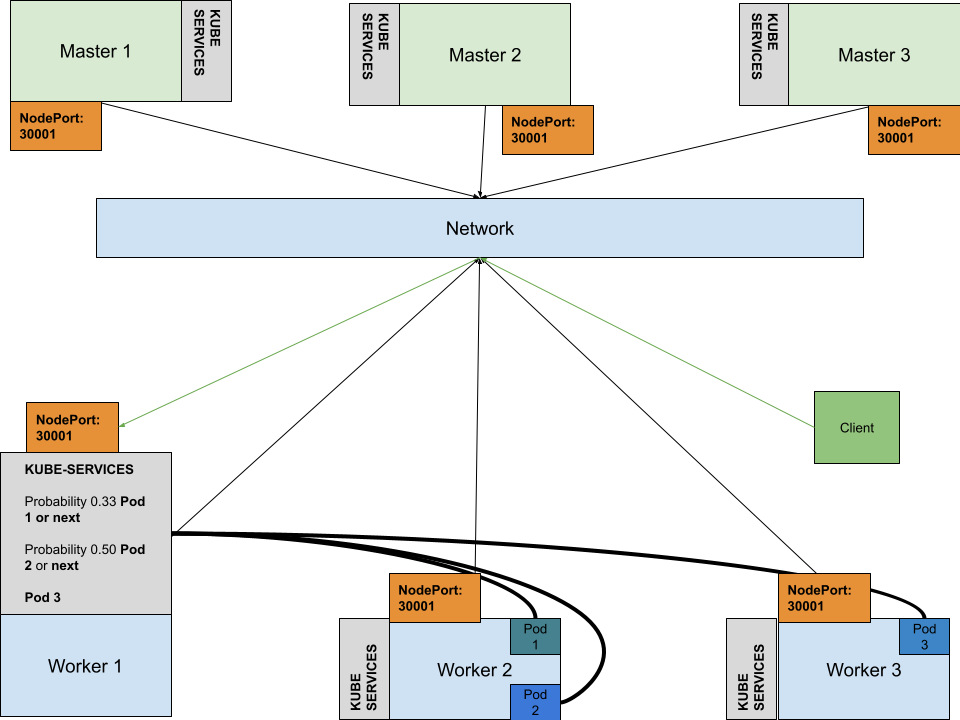

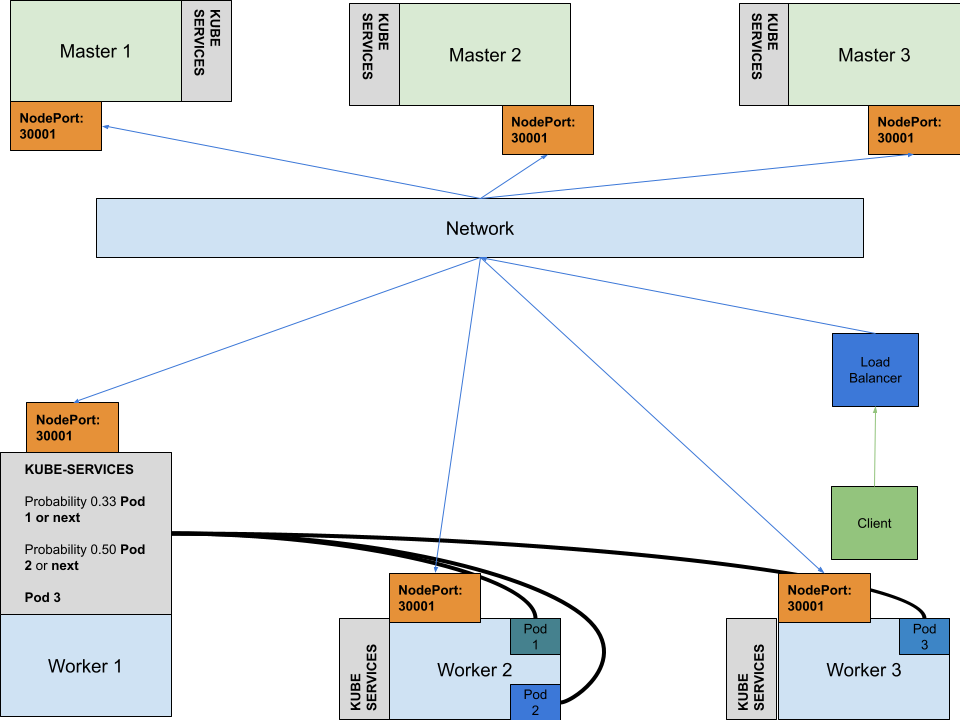

In the diagram you can see how the client connects to a service in the cluster:

- it enters the cluster network and from there it is distributed to one of the cluster nodes (including control plane nodes)
  - a load balancer can be deployed to split the traffic between the nodes
  - a simple port forward can be set up to point to a specific node
- it hits one of the rules in the KUBE-NODEPORTS chain (set up by kube-proxy)
- from there it goes through the same logic as for the ClusterIP service

LoadBalancer

This type of service is mainly used in cloud environments. It is enabled simply by setting the service type to “LoadBalancer”.
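As a sketch, reusing the service from the NodePort example, only the `type` field changes (the manifest is an illustration, not part of the original setup):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: LoadBalancer          # the only change compared to the NodePort example
  selector:
    app.kubernetes.io/name: web-server-instance
  ports:
  - name: http-port
    protocol: TCP
    port: 80
    targetPort: http-web-svc
```

Once a load balancer implementation assigns an address, it appears in the EXTERNAL-IP column of `kubectl get svc`; without one, the column stays at `<pending>`. The service still gets a cluster IP and, by default, a node port as well.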

In this case the traffic is split by a load balancer that knows the cluster state and can react to changes. If you want to experiment with this type of service, or you need a cloud-like setup, you can use MetalLB.

MetalLB can be deployed in two modes:

- layer 2 mode
  - one node (master or worker) takes ownership of the service IP
  - the MAC address for the IP is the MAC address of the node that has ownership
  - it doesn’t balance traffic between nodes, only between pods
  - if the node goes down, another node takes ownership
- BGP mode
  - true load balancing between nodes
  - the routers need to be told which nodes are balanced (the next hops for the service IP), which is done over BGP
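A minimal configuration might look like the sketch below, assuming MetalLB v0.13 or later (configured through custom resources) is installed in the `metallb-system` namespace. The resource names, the address range `192.168.0.240-192.168.0.250`, the router address `192.168.0.1` and the private ASNs are hypothetical; the range must consist of free addresses on the nodes’ network. Apply either the L2Advertisement or the BGPPeer plus BGPAdvertisement pair, not both.

```yaml
# address pool that LoadBalancer services get their external IPs from
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: lab-pool                     # hypothetical pool name
  namespace: metallb-system
spec:
  addresses:
  - 192.168.0.240-192.168.0.250      # assumed free range on the nodes' LAN
---
# layer 2 mode: the node that owns a service IP answers ARP requests for it
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: lab-l2
  namespace: metallb-system
spec:
  ipAddressPools:
  - lab-pool
---
# BGP mode (alternative to the L2Advertisement): peer with a router
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: lab-router
  namespace: metallb-system
spec:
  myASN: 64512                       # hypothetical private ASN for the cluster
  peerASN: 64513                     # hypothetical private ASN of the router
  peerAddress: 192.168.0.1           # hypothetical router address
---
# announce the pool's addresses to the BGP peers
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  name: lab-bgp
  namespace: metallb-system
spec:
  ipAddressPools:
  - lab-pool
```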